
Description

BACKGROUND

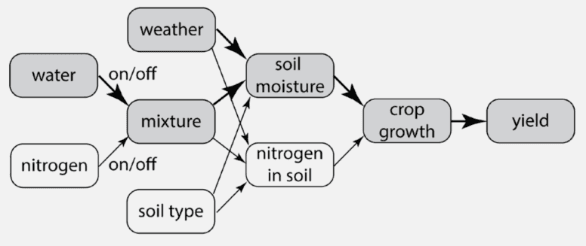

Trust is an essential prerequisite for adopting AI-based solutions in next-generation food systems. Explainability has been identified as a key component in increasing both users’ trust in and their acceptance of AI-based solutions. Furthermore, explainability helps justify an AI system’s decisions and supports making them fair and ethical. Reinforcement learning (RL) will play a key role in solving control and decision-making problems in agricultural production and food processing and distribution, as well as genotype selection problems in molecular breeding. Our proposed explainable RL (XRL) framework assumes access to a simulator or model of the food system under control and to a list of external and internal variables relevant to the system, which a domain expert will provide.

GOALS

- A structural causal model (SCM) that describes the relationships between the variables (listed by the expert) will be learned from the RL agent’s interaction with the simulator.

- The RL agent will learn a control policy that both maximizes the expected reward and respects the learned SCM. Our XRL framework will cycle between these two phases (SCM learning and policy learning) until a consistent SCM and control policy have been obtained.

- Our XRL agent will answer human inquiries based on both the learned SCM and the control policy. One crucial feature that distinguishes our framework from existing XRL methods is that it can generate counterfactual explanations.
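To make the idea of a counterfactual explanation concrete, the following is a minimal sketch, not the project's implementation. It assumes a toy linear SCM with hypothetical variables (irrigation, soil moisture, crop yield) and made-up coefficients, and it applies the standard three-step counterfactual procedure (abduction, action, prediction) to answer "what would the yield have been under a different irrigation level?"

```python
from dataclasses import dataclass


@dataclass
class ToyLinearSCM:
    """Hypothetical SCM: irrigation -> soil_moisture -> crop_yield.

    Structural equations (coefficients are illustrative assumptions):
        soil_moisture = a * irrigation    + u_m
        crop_yield    = b * soil_moisture + u_y
    """
    a: float = 0.5  # assumed effect of irrigation on soil moisture
    b: float = 2.0  # assumed effect of soil moisture on crop yield

    def counterfactual_yield(self, obs_irrigation, obs_moisture, obs_yield,
                             new_irrigation):
        # 1. Abduction: recover the exogenous noise terms that explain
        #    the observed episode.
        u_m = obs_moisture - self.a * obs_irrigation
        u_y = obs_yield - self.b * obs_moisture
        # 2. Action: replace the observed irrigation with the alternative.
        # 3. Prediction: propagate through the same equations, keeping
        #    the recovered noise fixed.
        cf_moisture = self.a * new_irrigation + u_m
        return self.b * cf_moisture + u_y


scm = ToyLinearSCM()
cf = scm.counterfactual_yield(
    obs_irrigation=10.0, obs_moisture=9.0, obs_yield=14.0,
    new_irrigation=12.0,
)
print(f"Observed yield: 14.0, counterfactual yield: {cf}")  # counterfactual yield: 16.0
```

In this toy setting, the explanation would read: "had irrigation been 12 instead of 10, the yield would have been 16 instead of 14." In the framework described above, the structural equations would instead come from the SCM learned through the agent's interaction with the simulator.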

IMPACT

The project’s greatest merit lies in its interface with the projects proposed in the application clusters. This interface will multiply the impact of our project (and vice versa) and facilitate the transfer of methods developed in both the foundational and applied AI clusters to industry.


Team

Zhaodan Kong

Principal Investigator

Xin Liu

Co-Principal Investigator

Ilias Tagkopoulos

Co-Principal Investigator

Niao He

Co-Principal Investigator

Nitin Nitin

Collaborator

Daniel Runcie

Collaborator

Isaya Kisekka

Collaborator

Mason Earles

Collaborator


Publications